OLMoE and the hidden simplicity in training better foundation models

Interconnects - A podcast by Nathan Lambert

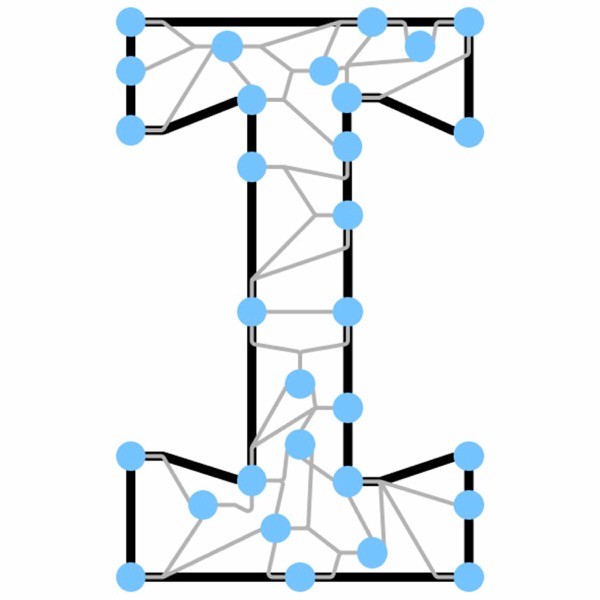

Ai2 released OLMoE, which is probably our "best" model yet relative to its peers, but not much has changed in the process.

This is AI-generated audio made with Python and 11Labs.

Source code: https://github.com/natolambert/interconnects-tools
Original post: https://www.interconnects.ai/p/olmoe-and-building-better-llms

00:00 OLMoE and the hidden simplicity in training better foundation models
02:04 Frontier model team compute allocations
04:19 De-risking training complexity
06:40 On organizational complexity
09:05 Compounding improvements -- the key to building better language models

Fig 1: https://huggingface.co/datasets/natolambert/interconnects-figures/resolve/main/olmoe/img_005.png
Fig 2: https://huggingface.co/datasets/natolambert/interconnects-figures/resolve/main/olmoe/img_007.png
Fig 3: https://huggingface.co/datasets/natolambert/interconnects-figures/resolve/main/olmoe/img_009.png
Fig 4: https://huggingface.co/datasets/natolambert/interconnects-figures/resolve/main/olmoe/img_011.png
Fig 5: https://huggingface.co/datasets/natolambert/interconnects-figures/resolve/main/olmoe/img_028.png
Fig 6: https://huggingface.co/datasets/natolambert/interconnects-figures/resolve/main/olmoe/img_030.png
Fig 7: https://huggingface.co/datasets/natolambert/interconnects-figures/resolve/main/olmoe/img_032.png

This is a public episode. If you'd like to discuss this with other subscribers or get access to bonus episodes, visit www.interconnects.ai/subscribe